Adversarial attacks on AI systems are a growing concern. From poisoning attacks that corrupt training data to evasion attacks that trick deployed models, this article covers the methods and consequences of these attacks.

It also examines the ethical implications and the techniques for defending against these threats, so that AI systems stay reliable in an increasingly data-driven world.

Definition of Adversarial Attacks on AI Systems

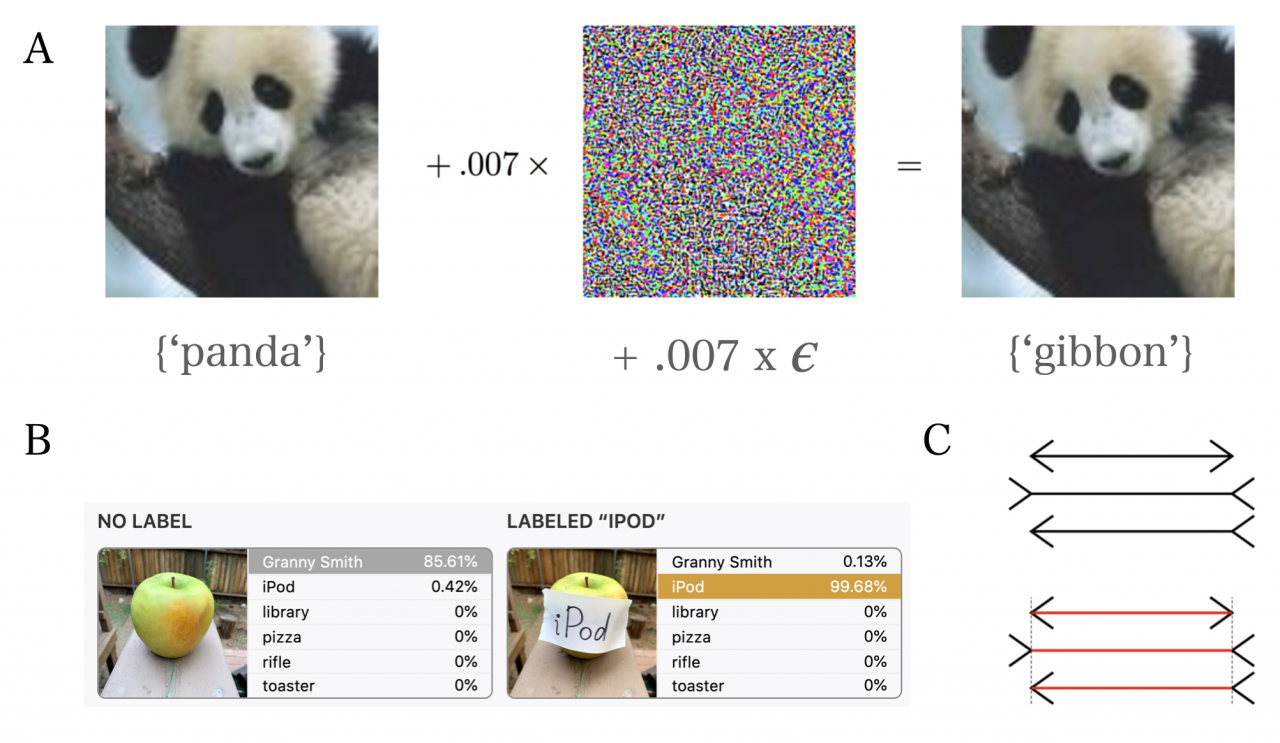

Adversarial attacks are deliberate attempts to manipulate the behavior of AI systems by introducing carefully crafted inputs designed to exploit vulnerabilities in the system’s architecture or learning process. These attacks aim to mislead or compromise the system, leading to incorrect predictions or harmful actions.

Examples of adversarial attacks include:

- Creating images that are misclassified by image recognition systems.

- Crafting text inputs that fool spam filters.

- Generating audio samples that speech recognition systems transcribe incorrectly.

Types of Adversarial Attacks

Adversarial attacks can be classified into different types based on their goals and methods:

Poisoning Attacks

Poisoning attacks involve manipulating the training data of an AI system to introduce bias or errors into the model. This can be done by adding malicious data points, modifying existing data, or removing legitimate data.
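
As a rough illustration of the "adding malicious data points" case, the sketch below poisons a toy nearest-centroid classifier. The classifier, the data values, and the names `fit_centroids` and `predict` are all assumptions made up for this example. It is not a model of any real system.

```python
import numpy as np

def fit_centroids(x, y):
    """Return the mean feature value of each class (a nearest-centroid model)."""
    return {int(label): float(x[y == label].mean()) for label in np.unique(y)}

def predict(centroids, value):
    """Predict the class whose centroid is closest to value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))

# Clean training set: class 0 clusters near 1.5, class 1 clusters near 8.5.
x_clean = np.array([0.0, 1.0, 2.0, 3.0, 7.0, 8.0, 9.0, 10.0])
y_clean = np.array([0, 0, 0, 0, 1, 1, 1, 1])

# Poison: the attacker injects four points deep in class 1 territory, labeled 0.
x_poison = np.concatenate([x_clean, np.full(4, 10.0)])
y_poison = np.concatenate([y_clean, np.zeros(4, dtype=int)])

clean_model = fit_centroids(x_clean, y_clean)
poisoned_model = fit_centroids(x_poison, y_poison)

probe = 7.0  # a legitimate class 1 input
clean_prediction = predict(clean_model, probe)
poisoned_prediction = predict(poisoned_model, probe)
print(clean_prediction, poisoned_prediction)
```

The injected points drag the class 0 centroid from 1.5 to 5.75, so the same legitimate input that the clean model assigns to class 1 is assigned to class 0 by the poisoned model.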

Evasion Attacks

Evasion attacks craft inputs that the AI system misclassifies at prediction time, without altering the training data. These inputs typically contain small, deliberate changes that exploit specific vulnerabilities in the system’s architecture or learning process.

Model Extraction Attacks

Model extraction attacks attempt to extract the underlying model parameters or structure from an AI system. This information can be used to understand the system’s behavior or to create new attacks.
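
A minimal sketch of the idea, assuming the simplest possible victim: a linear model behind a prediction API that returns real-valued scores. The function `query_victim` and the secret parameters are hypothetical. Real systems are rarely this easy to copy, since they are usually nonlinear and often return only labels.

```python
import numpy as np

# Hypothetical victim: the attacker can call query_victim but cannot read the secrets.
_SECRET_W = np.array([1.5, -2.0, 0.5])
_SECRET_B = 0.25

def query_victim(inputs):
    """Black-box prediction API that returns a real-valued score per input row."""
    return inputs @ _SECRET_W + _SECRET_B

# The attacker sends 20 inputs of their own choosing and records the answers.
rng = np.random.default_rng(0)
queries = rng.normal(size=(20, 3))
answers = query_victim(queries)

# Fit a surrogate linear model to the (query, answer) pairs by least squares.
design = np.hstack([queries, np.ones((len(queries), 1))])  # extra column for the bias
solution, *_ = np.linalg.lstsq(design, answers, rcond=None)
stolen_w, stolen_b = solution[:3], solution[3]
print(stolen_w, stolen_b)
```

Because the victim here is exactly linear and noise-free, a handful of queries is enough to recover its parameters almost perfectly. The recovered copy could then be used to plan further attacks offline.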

Defending against adversarial attacks on an AI system is not always easy, especially when defenses have to be retrofitted onto an existing system. It requires careful planning and execution.

However, by following best practices and staying up-to-date on the latest research, you can make your AI system more resilient to these attacks.

Methods for Launching Adversarial Attacks

There are several common methods used to launch adversarial attacks:

Gradient-based Methods

Gradient-based methods use the gradient of the model’s loss (or output) with respect to the input to find the direction in which a small input change most affects the prediction. These methods are typically efficient, but they need access to the model’s gradients or to a substitute model that approximates it.
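
One well-known gradient-based method is the fast gradient sign method (FGSM), which moves every input feature by a fixed step `eps` in the direction that increases the loss. The sketch below applies it to a hand-written logistic regression model. The weights `W`, bias `B`, input `x`, and step size `eps` are illustrative assumptions, not values from any real system.

```python
import numpy as np

W = np.array([2.0, -1.0])  # assumed model weights, known to the attacker (white-box)
B = 0.0                    # assumed model bias

def probability_class1(x):
    """Logistic regression: probability that x belongs to class 1."""
    return 1.0 / (1.0 + np.exp(-(x @ W + B)))

def predict(x):
    return int(probability_class1(x) > 0.5)

def fgsm(x, y, eps):
    """Shift x by eps per feature in the direction that increases the loss."""
    # For binary cross-entropy, d(loss)/dx = (p - y) * W.
    grad_x = (probability_class1(x) - y) * W
    return x + eps * np.sign(grad_x)

x = np.array([1.0, 0.5])  # a clean input whose true label is 1
eps = 0.8
x_adv = fgsm(x, y=1, eps=eps)

print(predict(x), predict(x_adv))
```

Here the clean input is classified as class 1, while the perturbed input `[0.2, 1.3]` is classified as class 0, even though no feature moved by more than `eps`.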

Evolutionary Algorithms

Evolutionary algorithms generate adversarial examples by repeatedly mutating candidate inputs and keeping the ones that fool the model best. These methods are typically more computationally expensive than gradient-based methods because they need many model queries, but they work without access to gradients, so they can be used against systems that expose only their outputs.
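
The sketch below shows a deliberately simple variant: an evolution strategy with Gaussian mutation and elitist selection, and no crossover. It only queries the model’s confidence and never touches its gradients. The victim model, the budget `eps`, and the names `confidence_class1` and `evolve_attack` are assumptions for illustration.

```python
import numpy as np

W, B = np.array([2.0, -1.0]), 0.0  # hypothetical victim parameters, hidden from the attacker

def confidence_class1(x):
    """Black-box query: the model's probability that x belongs to class 1."""
    return 1.0 / (1.0 + np.exp(-(x @ W + B)))

def evolve_attack(x, eps=0.8, population=20, generations=50, sigma=0.2, seed=0):
    """Search for a perturbation within [-eps, eps] that lowers class 1 confidence."""
    rng = np.random.default_rng(seed)
    best = np.zeros_like(x)
    best_score = confidence_class1(x)
    for _ in range(generations):
        # Mutation: add Gaussian noise to the current best, then enforce the budget.
        noise = rng.normal(scale=sigma, size=(population, x.size))
        children = np.clip(best + noise, -eps, eps)
        scores = np.array([confidence_class1(x + child) for child in children])
        # Selection: keep a child only if it beats the parent (elitism).
        if scores.min() < best_score:
            best, best_score = children[scores.argmin()], scores.min()
        if best_score < 0.5:  # the model now predicts class 0
            break
    return x + best, best_score

x = np.array([1.0, 0.5])  # clean input that the model assigns to class 1
x_adv, adv_confidence = evolve_attack(x)
print(confidence_class1(x), adv_confidence)
```

Each generation costs `population` model queries, which is where the extra expense relative to a single gradient computation comes from.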

Generative Adversarial Networks (GANs)

GANs are a type of deep learning model that can be trained to generate adversarial examples. Training a GAN is typically more computationally expensive than the other methods, but a trained generator can produce realistic-looking adversarial inputs.

Impact of Adversarial Attacks on AI Systems

Adversarial attacks can have a significant impact on AI systems in various domains, including:

Healthcare

Adversarial attacks can be used to compromise medical diagnosis systems, leading to misdiagnosis and incorrect treatment decisions.

Finance

Adversarial attacks can be used to manipulate financial trading systems, leading to financial losses.

Cybersecurity

Adversarial attacks can be used to bypass security systems, such as spam filters and intrusion detection systems.

Techniques for Defending Against Adversarial Attacks

There are several techniques that can be used to defend against adversarial attacks, including:

Adversarial Training

Adversarial training involves training an AI system on a dataset that includes adversarial examples. This helps the system to become more robust to adversarial attacks.
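
A minimal sketch of this idea, assuming a logistic regression model, synthetic two-cluster data, and FGSM as the attack used to generate the adversarial examples. All of these choices, along with the hyperparameters `eps`, `lr`, and `steps`, are illustrative assumptions. Adversarial examples are regenerated against the current model at every training step.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """Perturb each row of x by eps in the direction that increases the loss."""
    grad_x = (sigmoid(x @ w + b) - y)[:, None] * w  # d(cross-entropy)/dx per row
    return x + eps * np.sign(grad_x)

def adversarial_train(x, y, eps=0.5, lr=0.1, steps=200):
    """Logistic regression trained on clean plus freshly generated FGSM examples."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        x_mix = np.vstack([x, fgsm(x, y, w, b, eps)])
        y_mix = np.concatenate([y, y])
        error = sigmoid(x_mix @ w + b) - y_mix
        w -= lr * (x_mix.T @ error) / len(y_mix)
        b -= lr * error.mean()
    return w, b

def accuracy(x, y, w, b):
    """Fraction of rows whose predicted class matches the label."""
    return float(np.mean((sigmoid(x @ w + b) > 0.5) == y))

# Synthetic data (an assumption for illustration): two Gaussian blobs.
rng = np.random.default_rng(0)
x = np.vstack([rng.normal(-2.0, 0.5, (100, 2)), rng.normal(2.0, 0.5, (100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, b = adversarial_train(x, y)
clean_acc = accuracy(x, y, w, b)
robust_acc = accuracy(fgsm(x, y, w, b, 0.5), y, w, b)
print(clean_acc, robust_acc)
```

The second number reports accuracy on inputs attacked with the same method and budget used during training. Robustness against other attacks or larger budgets is not implied.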

Defending an AI system against adversarial attacks is a game of cat and mouse: the attacker tries to find a way to fool the system, while the system tries to defend itself. Adversarial training is therefore not a one-time fix, because a model hardened against one kind of attack can still be vulnerable to a new one.

Data Augmentation

Data augmentation involves generating new training data from existing data using transformations such as cropping, flipping, and rotating. This increases the diversity of the training data and can make the system more robust to small input changes, although on its own it is usually a weaker defense against deliberately crafted inputs than adversarial training.
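
The three transformations named above can be sketched with plain NumPy array operations. The helper `augment` and the tiny 4×4 stand-in image are assumptions for illustration. A real pipeline would typically apply random variants of these through an image library.

```python
import numpy as np

def augment(image, crop=1):
    """Return simple variants of a 2-D image: flip, 90-degree rotation, center crop."""
    return {
        "flipped": np.fliplr(image),               # mirror left to right
        "rotated": np.rot90(image),                # rotate 90 degrees counterclockwise
        "cropped": image[crop:-crop, crop:-crop],  # drop a border of `crop` pixels
    }

image = np.arange(16).reshape(4, 4)  # stand-in for a grayscale image
variants = augment(image)
for name, variant in variants.items():
    print(name, variant.shape)
```

Each variant keeps the original label, so one labeled image yields several training examples.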

Input Validation

Input validation involves checking the input data for errors or malicious content before it is processed by the AI system. This can help to prevent adversarial attacks from being successful.
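
A minimal sketch of such a check, assuming the model expects a 28×28 array of pixel intensities between 0 and 1. The expected shape, the value range, and the function name `validate_input` are hypothetical and would depend on the actual system.

```python
import numpy as np

def validate_input(x, expected_shape=(28, 28), low=0.0, high=1.0):
    """Return a list of problems found in x; an empty list means every check passed."""
    problems = []
    x = np.asarray(x, dtype=float)
    if x.shape != expected_shape:
        problems.append(f"expected shape {expected_shape}, got {x.shape}")
    if not np.all(np.isfinite(x)):
        problems.append("contains NaN or infinite values")
    elif x.size and (x.min() < low or x.max() > high):
        problems.append(f"values outside [{low}, {high}]")
    return problems

good = np.full((28, 28), 0.5)
bad = good.copy()
bad[0, 0] = 7.0  # out-of-range pixel

print(validate_input(good))
print(validate_input(bad))
```

Checks like these reject malformed or out-of-range inputs before they reach the model. A carefully crafted adversarial example that stays within the valid range would still pass, so validation is best treated as one layer among several defenses.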

Ethical Considerations

Adversarial attacks on AI systems raise several ethical concerns, including:

Potential for Misuse and Harm

Adversarial attacks can be used to cause harm, such as by manipulating medical diagnosis systems or financial trading systems.

Adversarial attacks on an AI system can be a major problem, but there are ways to mitigate them, such as making the system more robust through adversarial training.

Responsibility for Developing and Deploying Robust AI Systems

Developers and deployers of AI systems have a responsibility to ensure that their systems are robust to adversarial attacks. This includes developing and deploying techniques for defending against adversarial attacks.

Conclusion

In the face of adversarial attacks on AI systems, vigilance is paramount. By understanding the nature of these threats and implementing robust defenses, we can safeguard the integrity of AI and ensure its responsible deployment in shaping our future.

Helpful Answers

What are adversarial attacks on AI systems?

Adversarial attacks are malicious attempts to manipulate or deceive AI models by introducing carefully crafted inputs designed to exploit vulnerabilities in the model.

What are the different types of adversarial attacks?

Common types include poisoning attacks (corrupting training data), evasion attacks (tricking models into making incorrect predictions), and model extraction attacks (recovering a model’s parameters or structure).

How can we defend against adversarial attacks?

Techniques include adversarial training (exposing models to adversarial examples during training), data augmentation (adding diverse data to training sets), and input validation (checking inputs for suspicious patterns).